Angular Smart.FileUpload - Integrating With Azure Face API

In this tutorial, we show how to configure the Angular Smart.FileUpload component to send images directly to the Azure Face API and then display the data the service returns.

The cloud-based Face API provides a set of advanced face algorithms that detect and identify faces in images.

Sending Files to the Face API

Intercepting Smart.FileUpload POST Request

Smart.FileUpload sends files in POST requests as FormData (multipart/form-data), while the Azure Face API expects the raw image bytes, such as an ArrayBuffer, in the request body. Because of this, we need to change the format of the data sent to the server. We intercept the FileUpload request and use a FileReader to convert the selected file to an ArrayBuffer. The interception is done by overriding XMLHttpRequest.prototype.send inside the ngOnInit lifecycle hook of our component:

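```typescript
ngOnInit(): void {
  // Note: this patches send() for every XMLHttpRequest in the application.
  const originalSend = XMLHttpRequest.prototype.send;

  XMLHttpRequest.prototype.send = function (
    this: XMLHttpRequest,
    body?: Document | XMLHttpRequestBodyInit | null
  ) {
    // Smart.FileUpload puts the file under the 'userfile[]' field of a FormData body.
    const file = body instanceof FormData ? body.get('userfile[]') : null;

    // Any other request (GET, JSON, ...) is passed through unchanged.
    if (!(file instanceof File)) {
      originalSend.call(this, body);
      return;
    }

    // Read the file as an ArrayBuffer and send the raw bytes instead of the FormData.
    const reader = new FileReader();
    reader.onload = () => originalSend.call(this, reader.result as ArrayBuffer);
    reader.readAsArrayBuffer(file);
  };
}
```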
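The conversion step can also be isolated into a small helper built on the Promise-based `Blob.arrayBuffer()` method instead of FileReader callbacks. This is a sketch: the name `toBinaryBody` is hypothetical, and it assumes the file is stored under the same `'userfile[]'` field the interceptor above reads.

```typescript
// Hypothetical helper: pulls the uploaded file out of the FormData body that
// Smart.FileUpload sends and resolves to its bytes, or to null when the body
// is not a FormData containing a file under `fieldName`.
async function toBinaryBody(
  body: unknown,
  fieldName = 'userfile[]'
): Promise<ArrayBuffer | null> {
  if (!(body instanceof FormData)) {
    return null; // not an upload request; leave it alone
  }
  const entry = body.get(fieldName);
  // FormData.get returns a string for plain fields and a File (a Blob) for files.
  if (entry === null || typeof entry === 'string') {
    return null;
  }
  return entry.arrayBuffer();
}
```

Because `XMLHttpRequest.send()` also accepts a `Blob`, and a `File` is a `Blob`, forwarding the file object itself (with the octet-stream header set) may avoid the read step entirely; treat that as an untested alternative.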

Request Configuration

We also need to configure the request URL and headers. We set them through the uploadUrl and setHeaders properties of the FileUpload:

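```typescript
// Replace the placeholders with your Face API resource name and subscription key.
uploadUrl = 'https://{{YOUR-FACE-API-RESOURCE-NAME}}.cognitiveservices.azure.com/face/v1.0/detect?returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes=headPose&recognitionModel=recognition_03&returnRecognitionModel=false&detectionModel=detection_03&faceIdTimeToLive=86400';

setHeaders = function (xhr: XMLHttpRequest, file: any) {
  // The request body is sent as raw image bytes, not as multipart form data.
  xhr.setRequestHeader('Content-Type', 'application/octet-stream');
  // Authenticates the request against your Face API resource.
  xhr.setRequestHeader('Ocp-Apim-Subscription-Key', '{{YOUR-SUBSCRIPTION-KEY}}');
};
```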

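The detection options (face ID, landmarks, face attributes, recognition and detection models, face ID lifetime) are passed as query parameters of the detect endpoint. The application/octet-stream content type tells the service that the body is raw image data, and the Ocp-Apim-Subscription-Key header authenticates the request.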
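Instead of hand-editing a long query string, the same URL can be assembled from an options object with `URL` and `URLSearchParams`. This is a sketch: `DetectOptions` and `buildDetectUrl` are hypothetical names, and the option keys simply mirror the query parameters in the uploadUrl above.

```typescript
// Hypothetical options object mirroring the query parameters used in uploadUrl.
interface DetectOptions {
  returnFaceId: boolean;
  returnFaceLandmarks: boolean;
  returnFaceAttributes?: string; // e.g. 'headPose'; omitted when undefined
  recognitionModel: string;
  returnRecognitionModel: boolean;
  detectionModel: string;
  faceIdTimeToLive: number; // seconds
}

// Builds the detect endpoint URL for the given Face API resource name,
// adding each defined option as a query parameter in declaration order.
function buildDetectUrl(resourceName: string, options: DetectOptions): string {
  const url = new URL(
    `https://${resourceName}.cognitiveservices.azure.com/face/v1.0/detect`
  );
  for (const [key, value] of Object.entries(options)) {
    if (value !== undefined) {
      url.searchParams.set(key, String(value));
    }
  }
  return url.toString();
}
```

With the option values from this tutorial, the result matches the hard-coded uploadUrl, so the component could assign `uploadUrl = buildDetectUrl('my-resource', { ... })` instead.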

Response Handling

Lastly, we need to handle the Face API response. The service analyzes the image, detects the faces in it, and returns the results as JSON.

First, we bind to the fileSelected event of the FileUpload and save the selected file in the selectedFile variable as a data URL, again using a FileReader, so it can be displayed once the upload succeeds:

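```typescript
fileSelected = (event: any) => {
  // Read the chosen File from the underlying <input type="file">.
  const input = document.querySelector('input[type="file"]') as HTMLInputElement | null;
  const file = input?.files?.[0];
  if (!file) {
    return;
  }

  // Store the image as a data URL so it can be bound to <img [src]> later.
  const reader = new FileReader();
  reader.onload = () => (this.selectedFile = reader.result as string);
  reader.readAsDataURL(file);
};
```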
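If you prefer async/await over onload callbacks, a data URL can also be built directly from the file's bytes. This is a sketch: `blobToDataUrl` is a hypothetical helper, and in the browser wrapping `FileReader.readAsDataURL` in a Promise would be an equally valid choice.

```typescript
// Hypothetical helper: converts a Blob (a File is a Blob) into a base64 data URL
// that can be bound to an <img> src.
async function blobToDataUrl(blob: Blob): Promise<string> {
  const bytes = new Uint8Array(await blob.arrayBuffer());
  let binary = '';
  // Build the binary string in chunks so large images do not exceed
  // the argument limit of String.fromCharCode.
  const chunkSize = 0x8000;
  for (let i = 0; i < bytes.length; i += chunkSize) {
    binary += String.fromCharCode(...Array.from(bytes.subarray(i, i + chunkSize)));
  }
  // Fall back to a generic binary type when the Blob carries no MIME type.
  const mimeType = blob.type || 'application/octet-stream';
  return `data:${mimeType};base64,${btoa(binary)}`;
}
```

In the component, the handler body would then reduce to `this.selectedFile = await blobToDataUrl(file);` inside an async function.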

Then, we will use the responseHandler callback property of the FileUpload to read the response and display the received result:

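```typescript
responseHandler = (xhr: XMLHttpRequest) => {
  // 200 OK: the body is a JSON array with one entry per detected face.
  if (xhr.status === 200) {
    this.image = this.selectedFile; // show the uploaded image
    this.faceAPIResponse = xhr.responseText; // show the raw JSON
  }
};
```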
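Rather than showing the raw JSON, the response text can be parsed into typed objects. This is a sketch: the interfaces reflect our understanding of the detect response (a face rectangle per face, plus the requested attributes such as headPose) and should be checked against the Face API reference; `summarizeFaces` is a hypothetical helper.

```typescript
// Assumed shape of one entry in the detect response.
interface FaceRectangle { top: number; left: number; width: number; height: number; }
interface HeadPose { pitch: number; roll: number; yaw: number; }
interface DetectedFace {
  faceId?: string; // present when returnFaceId=true
  faceRectangle: FaceRectangle;
  faceAttributes?: { headPose?: HeadPose };
}

// Hypothetical helper: parses xhr.responseText and returns one readable line per face.
function summarizeFaces(responseText: string): string[] {
  const faces = JSON.parse(responseText) as DetectedFace[];
  return faces.map((face, i) => {
    const r = face.faceRectangle;
    const pose = face.faceAttributes?.headPose;
    const poseText = pose ? `, yaw ${pose.yaw}` : '';
    return `Face ${i + 1}: ${r.width}x${r.height} at (${r.left}, ${r.top})${poseText}`;
  });
}
```

For a simpler improvement, `JSON.stringify(JSON.parse(xhr.responseText), null, 2)` would pretty-print the same data inside the `<pre>` element.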

Full Example

This is the full code of our component:

app.component.html:

```html
<smart-file-upload [uploadUrl]="uploadUrl" [setHeaders]="setHeaders"
  [responseHandler]="responseHandler" (fileSelected)="fileSelected($event)">
</smart-file-upload>

<h4>Image:</h4>
<img [src]="image" width="300" />

<div>
  <h4 class="text-center">FaceAPI Response Data:</h4>
  <div style="width: 300px; height: 300px;">
    <pre>{{ faceAPIResponse }}</pre>
  </div>
</div>
```

app.component.ts:

```typescript
import { Component, OnInit } from '@angular/core';

@Component({
  selector: 'app-root',
  templateUrl: './app.component.html'
})
export class AppComponent implements OnInit {
  selectedFile: string | null = null; // data URL of the chosen image
  image: string | null = null; // image shown after a successful upload
  faceAPIResponse = ''; // raw JSON returned by the Face API

  ngOnInit(): void {
    // Note: this patches send() for every XMLHttpRequest in the application.
    const originalSend = XMLHttpRequest.prototype.send;

    XMLHttpRequest.prototype.send = function (
      this: XMLHttpRequest,
      body?: Document | XMLHttpRequestBodyInit | null
    ) {
      // Smart.FileUpload puts the file under the 'userfile[]' field of a FormData body.
      const file = body instanceof FormData ? body.get('userfile[]') : null;

      // Any other request is passed through unchanged.
      if (!(file instanceof File)) {
        originalSend.call(this, body);
        return;
      }

      // Send the raw file bytes instead of the FormData.
      const reader = new FileReader();
      reader.onload = () => originalSend.call(this, reader.result as ArrayBuffer);
      reader.readAsArrayBuffer(file);
    };
  }

  responseHandler = (xhr: XMLHttpRequest) => {
    if (xhr.status === 200) {
      this.image = this.selectedFile;
      this.faceAPIResponse = xhr.responseText;
    }
  };

  setHeaders = function (xhr: XMLHttpRequest, file: any) {
    xhr.setRequestHeader('Content-Type', 'application/octet-stream');
    xhr.setRequestHeader('Ocp-Apim-Subscription-Key', '{{YOUR-SUBSCRIPTION-KEY}}');
  };

  uploadUrl = 'https://{{YOUR-FACE-API-RESOURCE-NAME}}.cognitiveservices.azure.com/face/v1.0/detect?returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes=headPose&recognitionModel=recognition_03&returnRecognitionModel=false&detectionModel=detection_03&faceIdTimeToLive=86400';

  fileSelected = (event: any) => {
    const input = document.querySelector('input[type="file"]') as HTMLInputElement | null;
    const file = input?.files?.[0];
    if (!file) {
      return;
    }

    const reader = new FileReader();
    reader.onload = () => (this.selectedFile = reader.result as string);
    reader.readAsDataURL(file);
  };
}
```

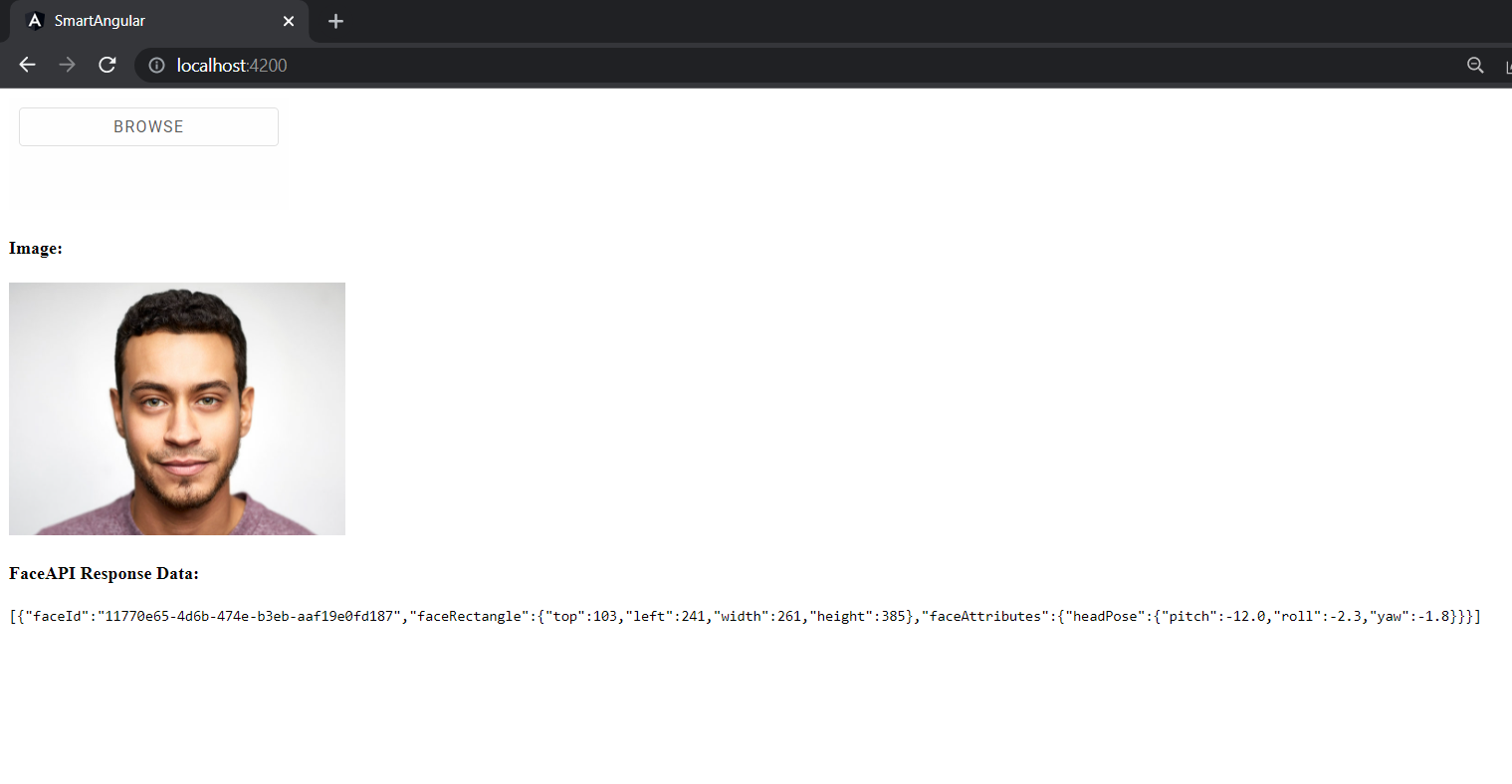

Result: